Investigation of Algorithms for Retrieving Similar Images

Table of Contents

Abstract

Automating the task of searching for similar images of fabric is crucial to improve efficiency in the fabric-related industry. Three algorithms were investigated in this project: per-pixel measures ($L_2$), K-Nearest Neighbors (KNN), and perceptual similarity (LPIPS). While LPIPS provides a better way to retrieve similar fabric as perceived by humans, its computational expense becomes a main obstacle when the number of images increases.

1. Introduction

Searching for similar images of fabric is a common task in the fabric-related industry. For instance, buyers may want to purchase fabrics similar to previous best-selling ones, while designers may want to experiment with different yet similar fabrics during the product design process.

Although humans can recognize similar images effortlessly, traditionally, this task has relied on human search through all available fabrics to find a similar one, which is time-consuming and exhausting. Automating this procedure is crucial to improve efficiency.

2. Search algorithm

Since we are searching through all images, we will use the notation $N$ to represent the total number of images in the following discussion.

2.1. Per-pixel measures ($L_2$ distance)

One of the classic methods for comparing images is to calculate the difference of every pixel using the $L_2$ distance. The time complexity is $O(N^2 * C * W * H)$, where $C$ represents the number of channels in the image, and $W$ and $H$ represent the width and height of the image, respectively. The space complexity is $O(N^2)$ because we need to store all the distances between the images.

2.2. K-Nearest Neighbors (KNN)

Rather than comparing the images directly, we can extract features from the image, such as the main color, color schemes, pattern size, and so on. Similarity can be defined as the $L_2$ distance between features. However, as the number of features increases, all samples may appear dissimilar, which is often referred to as the “curse of dimensionality”. To overcome this problem, we can reduce the dimensionality using PCA.

The time complexity is $O(N^2 * K)$, where $K$ represents the number of principal components from PCA. The space complexity remains $O(N^2)$ since we store all distances.

2.3. Perceptual similarity (LPIPS)

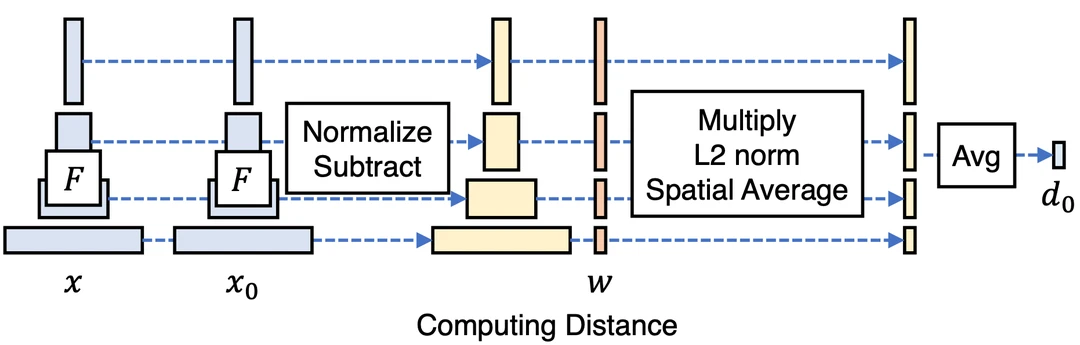

This method, which was published by Zhang ( Citation: Zhang, Isola & al., 2018 Zhang, R., Isola, P., Efros, A., Shechtman, E. & Wang, O. (2018). The unreasonable effectiveness of deep features as a perceptual metric. Proceedings of the IEEE conference on computer vision and pattern recognition. 586–595. ) as shown in the Fig. 1, extracts feature maps from activation layers and calculates the weighted distance $d_0$ between those feature maps. To apply this method, we can push images $x$ and $x_0$ through a pre-trained network (such as AlexNet ( Citation: Krizhevsky, 2014 Krizhevsky, A. (2014). One weird trick for parallelizing convolutional neural networks. arXiv preprint arXiv:1404.5997. ) ), denoted as $F$.

Feature maps are extracted by passing images $x$ and $x_0$ through a pre-trained network $F$. The distance is computed by subtracting the feature maps and then calculating the spatial average of the $L_2$ norm.

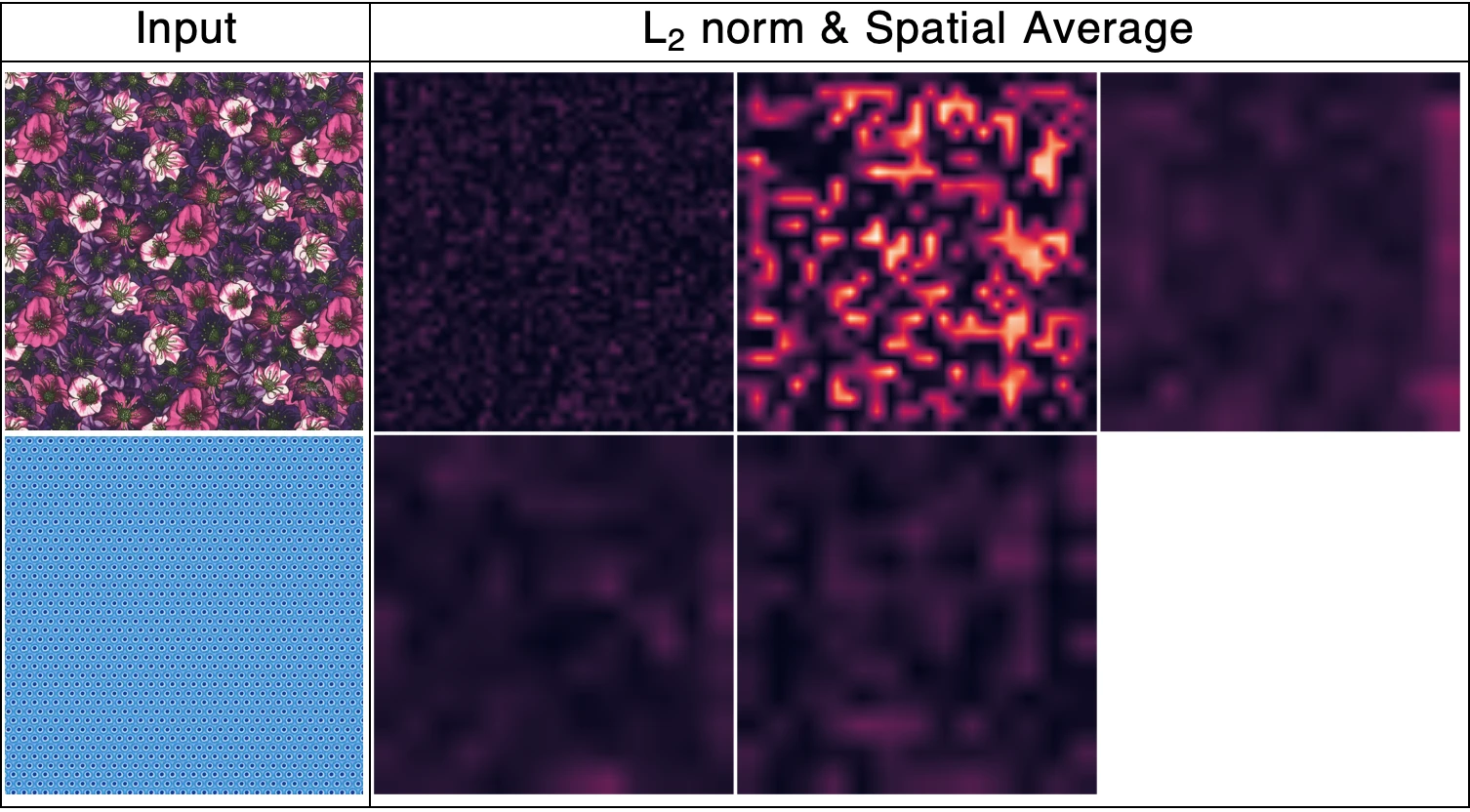

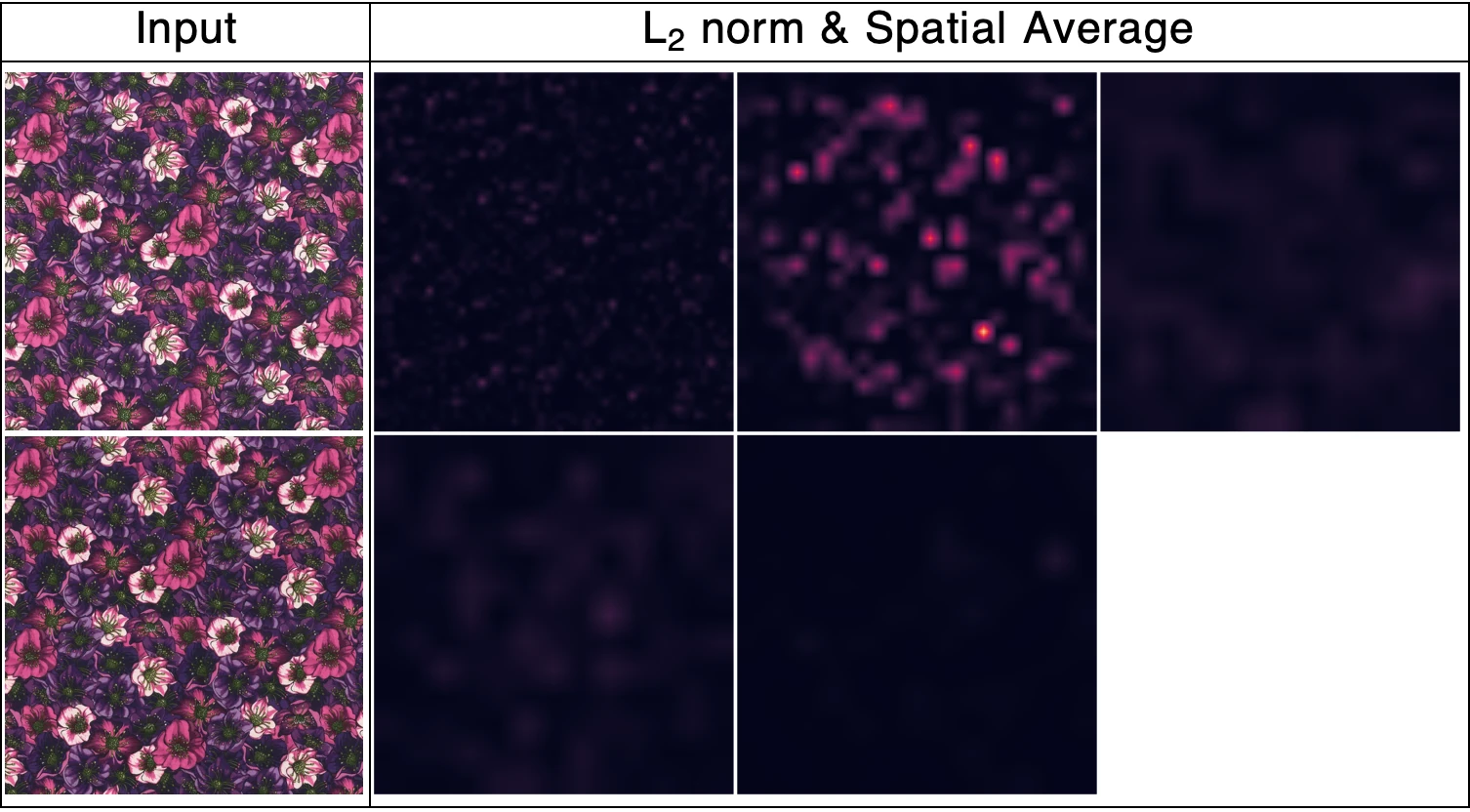

Fig. 2 provides an example with AlexNet. When the input images exhibit visual differences, as in Fig. 2 (a), the spatial average result differs significantly from that of the similar input images in Figure Fig. 2 (b).

The following shows the spatial average results from ReLU activation layers in AlexNet, based on visually (a) dissimilar and (b) similar input images.

(a)show hide

(b)show hide

While this method is proven to be effective in finding similar images, it is computationally expensive. Depending on the architecture of the pre-trained network, which may involve multiple convolutional and pooling layers, the runtime may be significantly increased. In addition, we will need additional memory to store all feature maps from activation layers.

3. Experiments

3.1. Dataset

We collected 113 digital images of fabric with dimensions of 30 cm x 42 cm (3500 x 4200 pixels), as shown in Fig. 3. To test the algorithm’s ability to retrieve similar images, we randomly select images from the dataset, add spatial pixel shifts, and then put them back into the dataset. We then test whether the algorithm can retrieve them.

The dataset is used in experiments

3.2. Result

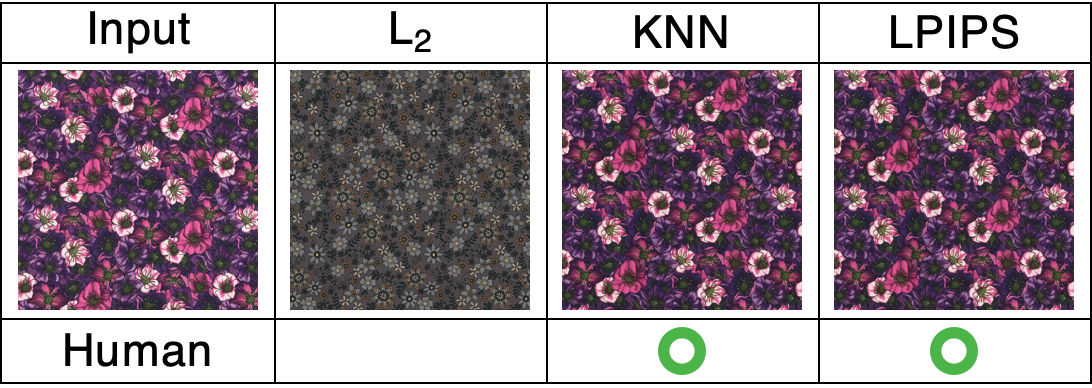

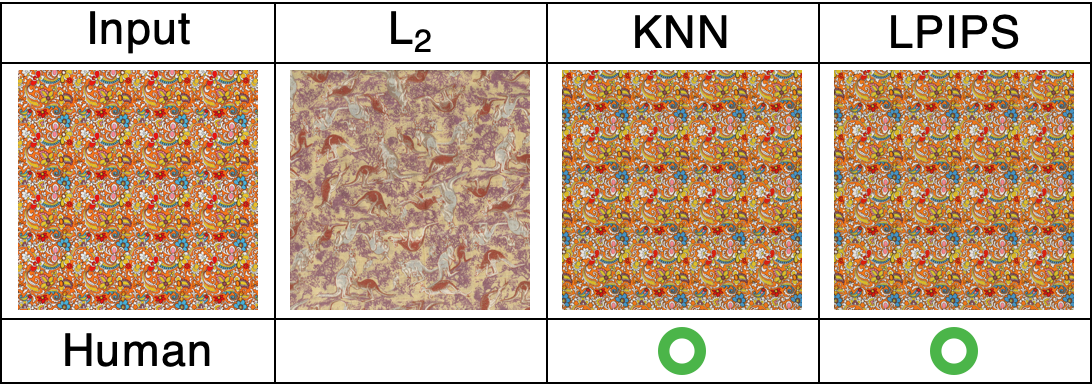

As shown in the Fig. 4, the use of per-pixel measures ($L_2$) may fail to retrieve images with spatial pixel shift. This is because even a small shift can result in a large difference. In contrast, KNN and LPIPS rely solely on extracted features and can therefore successfully find those images.

Per-pixel measures ($L_2$) fail to retrieve images with spatial pixel shift, because even small shifts can generate large differences.

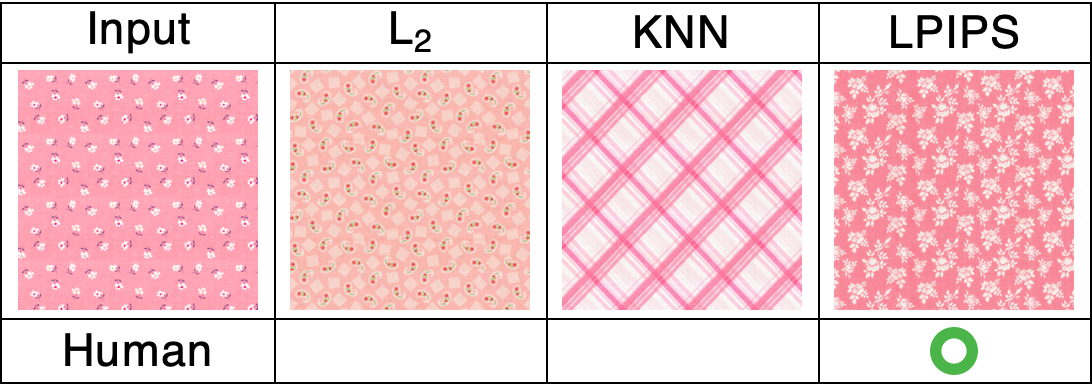

However, due to the lack of spatial information in features, KNN might still fail to find images that are similar from a human perspective. As shown in the Fig. 5, the KNN algorithm retrieves images that are similar to the input image only in terms of colors. However, the context and structure of these images are entirely different.

Although the color of the retrieved image from KNN may be similar to the input, the structure and context can be different. Therefore, KNN may fail to find similar images due to the lack of spatial information in the features.

As previously discussed, in terms of runtime (as shown in the Fig. 6), LPIPS is the most computationally expensive of the three algorithms, taking up to 8.3 seconds per image on our dataset.

Speed (images per second) is displayed on a logarithmic scale to compare different algorithms, with human processing used as the baseline.

name

1x

name

1x

name

1x

name

1x

4. Application

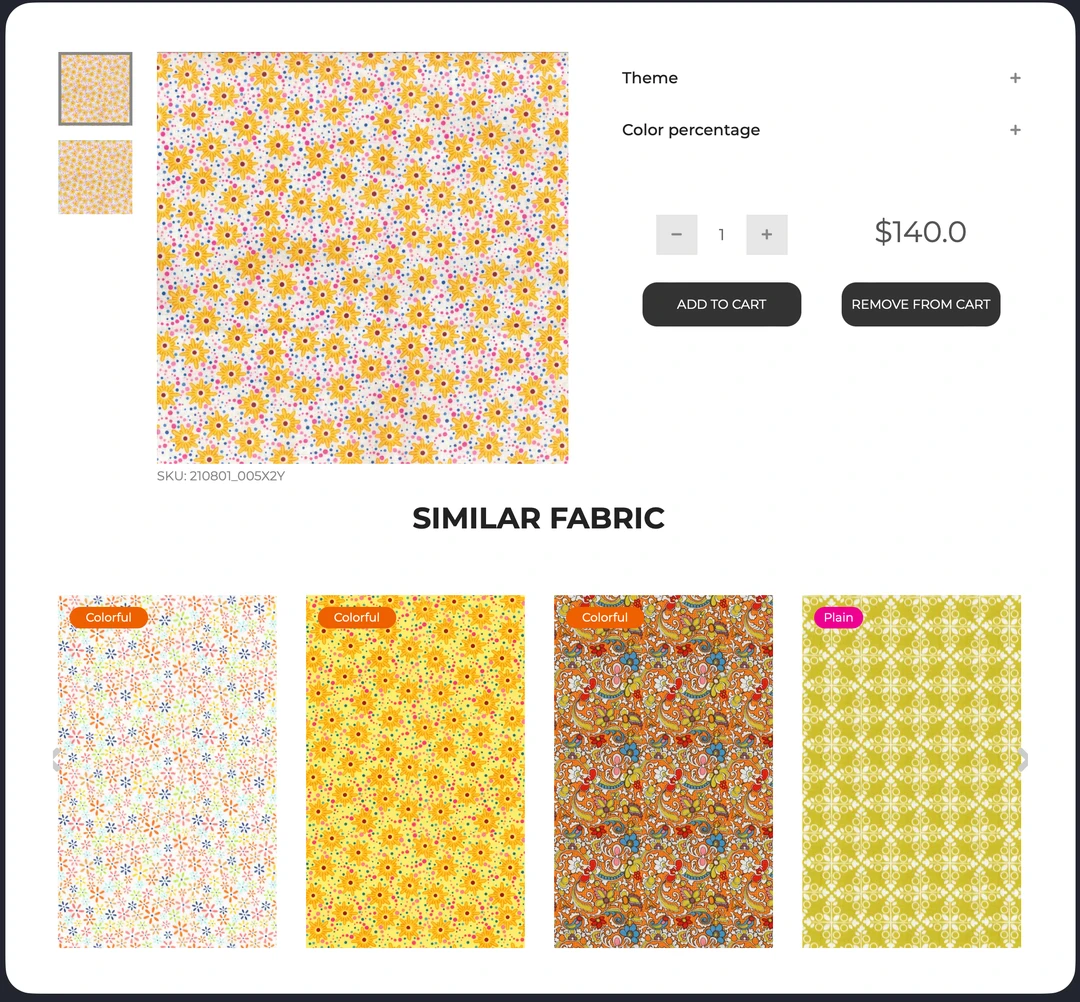

To improve the efficiency of fabric searching, LPIPS with AlexNet has been implemented into the in-house library, as shown in the Fig. 7. When a new image is imported, the algorithm retrieves similar fabrics and stores them in a database.

We have used LPIPS with AlexNet in our in-house library to retrieve similar fabric.

5. Conclusion

Three algorithms were investigated in this project. The per-pixel measure ($L_2$) did not meet our requirements due to its sensitivity to pixel shift. Similarly, KNN is not applicable since the features lack spatial information. LPIPS provides a better way to retrieve similar fabric as perceived by humans, but its computational expense becomes a main obstacle when the number of images increases.

Reference

- Zhang, Isola, Efros, Shechtman & Wang (2018)

- Zhang, R., Isola, P., Efros, A., Shechtman, E. & Wang, O. (2018). The unreasonable effectiveness of deep features as a perceptual metric. Proceedings of the IEEE conference on computer vision and pattern recognition. 586–595.

- Krizhevsky (2014)

- Krizhevsky, A. (2014). One weird trick for parallelizing convolutional neural networks. arXiv preprint arXiv:1404.5997.